Influential Papers

A curated collection of academic papers and articles that influence my research and thinking across various domains.

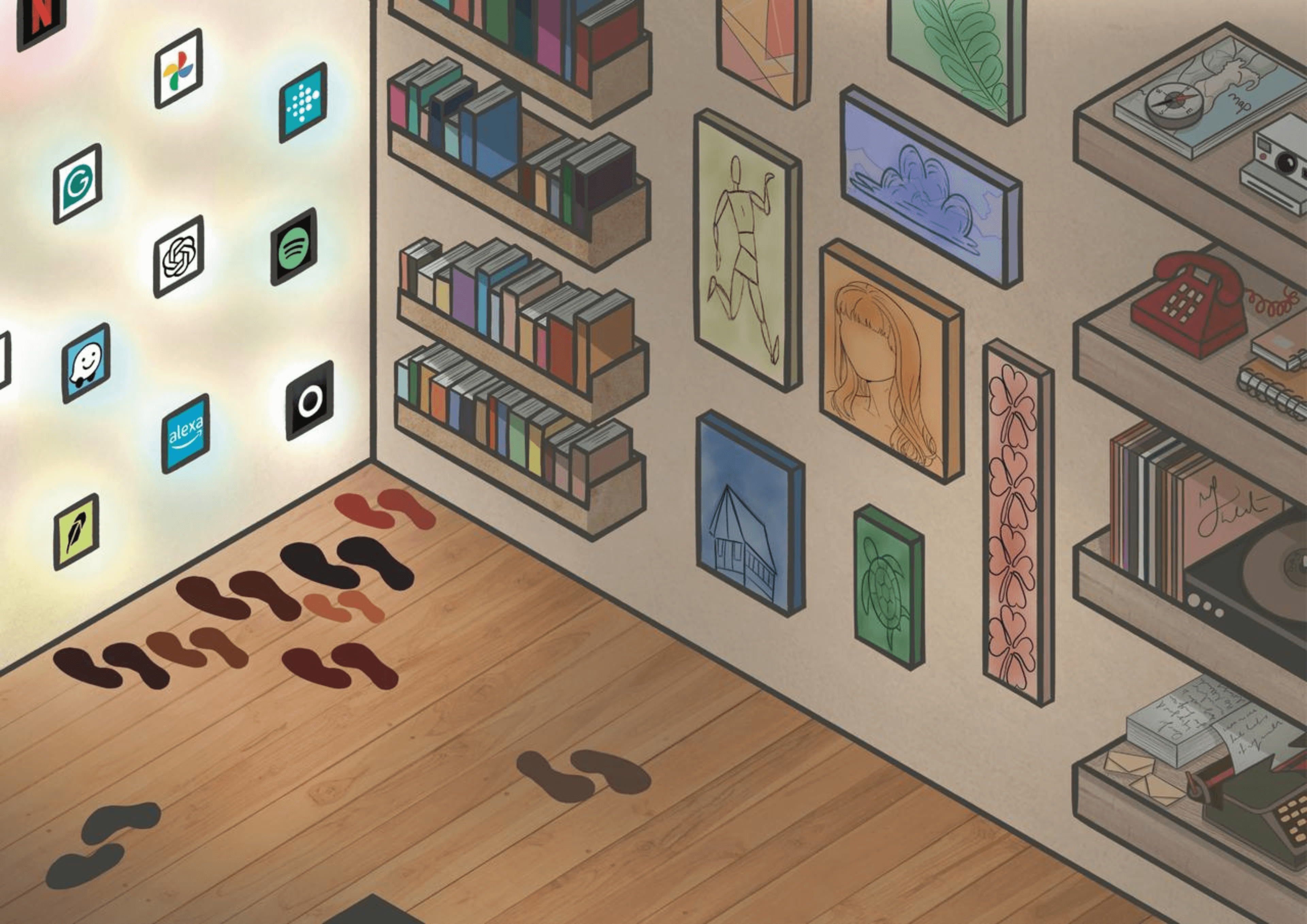

Google and TikTok rank bundles of information; ChatGPT ranks grains.

Nick Vincent

Interesting analysis arguing that Google, TikTok, and ChatGPT all rank information, but the former two rank more bundled information than the latter. Bundled information has clearer economic, social, and institutional properties, such as notions of originality, labor, etc. Many 'fixes' to AI involve bundling information. Information-bundling and -splitting is therefore relevant for thinking about the economics of AI.

Super interesting and illuminating perspective explaining why supposedly deep-learning-unique phenomena like deep double descent, overparametrization, etc. can be explained using soft inductive biases and existing generalization frameworks. The references are a treasure trove!

Self-reports are better measurement instruments than implicit measures

Olivier Corneille, Bertram Gawronski

Challenges the assumption that implicit measures are superior to self-reports in psychological research. Argues that self-reports demonstrate greater reliability, stronger predictive validity for both deliberate and spontaneous behaviors, and unmatched flexibility in exploring complex psychological constructs.

Cognitive Behaviors that Enable Self-Improving Reasoners

Kanishk Gandhi, Ayush Chakravarthy, Anikait Singh, Nathan Lile, Noah D. Goodman

Identifies four cognitive behaviors (verification, backtracking, subgoal setting, backward chaining) that predict whether a model can self-improve via RL. The key finding is striking: it's the presence of reasoning behaviors, not answer correctness, that matters. Models exposed to training data with proper reasoning patterns -- even with incorrect answers -- matched the improvement of models that had these behaviors naturally. A useful framing for thinking about what 'reasoning' actually is in these systems.

Proofs and Refutations: The Logic of Mathematical Discovery

Imre Lakatos

Uses an extremely entertaining and well-focused example of understanding the 'V - E + F = 2' Euler characterization of polyhedra to illustrate how mathematics develops -- dialectically between criticism (proof-analysis, refutation, counterexamples) and development (proof, lemma-incorporation, etc.). Entertainingly written as a Socratic conversation among a classroom of incredibly bright students.
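
As a small illustration of the formula and of the kind of counterexample the dialogue turns on, here is a minimal Python sketch. The function name `euler_characteristic` and the table of solids are my own choices for illustration; the vertex, edge, and face counts are the standard ones, and the "picture frame" (a square ring with a hole through it) is one of the counterexamples discussed in the book.

```python
def euler_characteristic(vertices: int, edges: int, faces: int) -> int:
    """Return V - E + F for a polyhedron given its counts."""
    return vertices - edges + faces


# (V, E, F) for a few solids; the picture frame has a hole through it.
solids = {
    "tetrahedron": (4, 6, 4),
    "cube": (8, 12, 6),
    "octahedron": (6, 12, 8),
    "picture frame": (16, 32, 16),
}

for name, (v, e, f) in solids.items():
    print(f"{name}: V - E + F = {euler_characteristic(v, e, f)}")
```

The first three solids give 2, while the picture frame gives 0, which is the sort of result that forces the class to decide whether to reject the counterexample, restrict the conjecture, or refine the proof.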

Towards Monosemanticity: Decomposing Language Models With Dictionary Learning

Trenton Bricken*, Adly Templeton*, Joshua Batson*, Brian Chen*, Adam Jermyn*, et al.

Really incredible work on discovering and visualizing feature decompositions of neuron layers with sparse autoencoders. Gorgeous visualizations and interfaces, and thoughtful reflections on interpretability methodology. I am a big fan of this publication style.

Towards Bidirectional Human-AI Alignment: A Systematic Review for Clarifications, Framework, and Future Directions

Hua Shen, Tiffany Knearem, Reshmi Ghosh, Kenan Alkiek, Kundan Krishna, et al.

A recognition that not only must AI 'align to' humans (their values, behavior, knowledge, etc., whatever this means), but we also need to think about how humans might 'align' to AI by working with AI-structured systems. This paper recognizes the social 'looping effects' brought about by AI and its behavior.